The Hidden Human Cost of "Safe" AI: How Tech Giants Outsource Trauma

Every time ChatGPT refuses to generate harmful content, every time your social media feed is free of graphic violence, there's a human cost you never see.

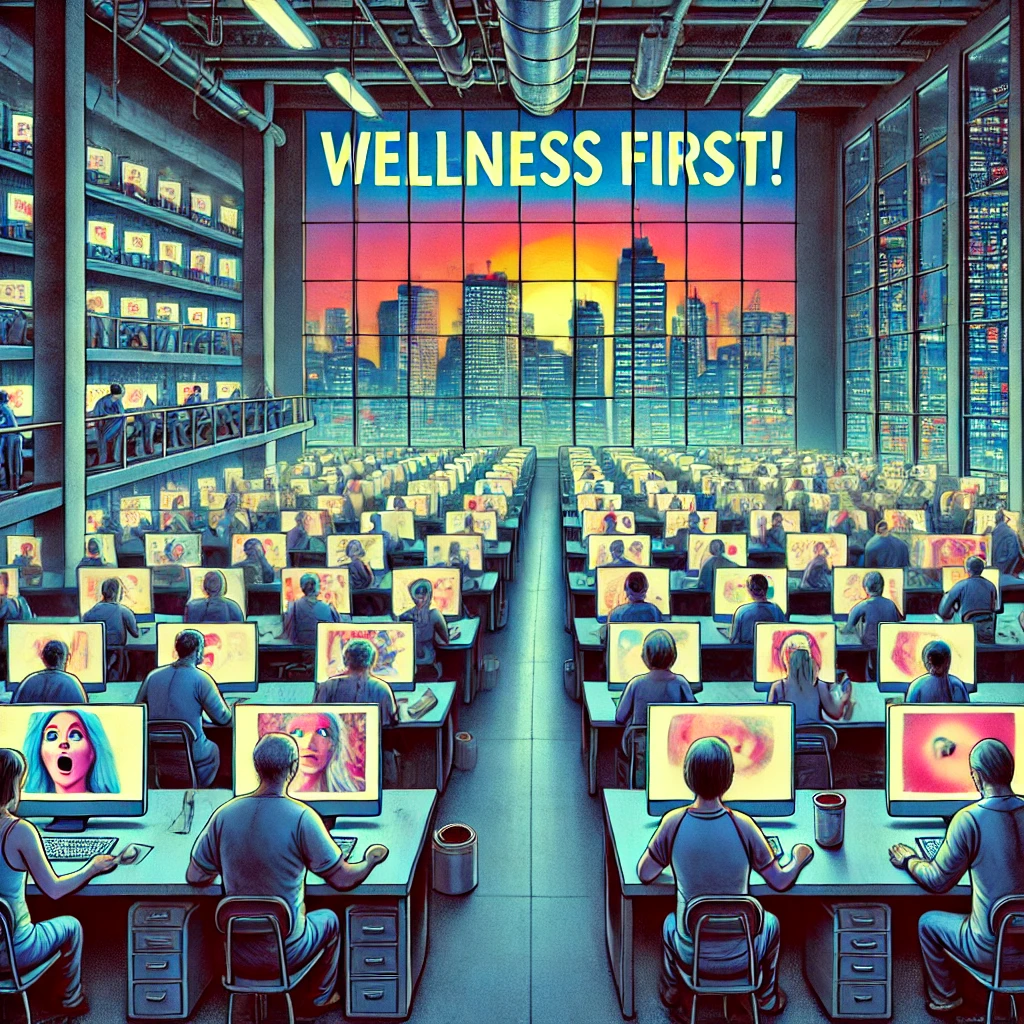

The Invisible Workforce

We celebrate AI breakthroughs. We marvel at how ChatGPT won't write hate speech, how Facebook filters out violence, how TikTok keeps children safe. We assume it's all algorithms and machine learning magic.

It's not.

Behind every "safe" AI system, behind every moderated social media platform, there's an army of human workers you've never heard of. They're called content moderators and data labelers. And right now, thousands of them are sitting in offices in Kenya, the Philippines, India, Turkey, and Colombia, watching the worst things humans have ever created.

What the Job Actually Entails

Imagine your workday:

You arrive at 8 AM. You sit at a computer. For the next nine hours, you watch videos and read text describing:

- Children being sexually abused
- People being murdered, often slowly
- Graphic suicides and self-harm
- Bestiality
- Torture
- Terrorist beheadings
- Necrophilia

You must watch these. All of them. Hundreds per day. You label them, categorize them, rate their severity. This is how AI learns what "harmful content" looks like. This is how the safety guardrails get built.

You're not allowed to look away. You have quotas. Performance metrics. If you're too slow, you lose your bonus. If you're not accurate enough, you get fired.

Your wellness counselor asks "How are you feeling?" once a month. They're not a psychiatrist. Sometimes they're not even qualified counselors.

You earn between $1.32 and $2 per hour.

The Scale of the Trauma

In one group of Facebook content moderators assessed in Kenya, 81% were diagnosed with severe PTSD.

Let that sink in. Not "some anxiety." Not "work-related stress." Severe post-traumatic stress disorder—the same condition that affects combat veterans and first responders.

The symptoms they reported:

- Developing trypophobia (one moderator, after repeatedly seeing maggots crawling from a decomposing hand)
- Smashing bricks against their houses
- Biting their own arms
- Being scared to sleep

One worker who helped train ChatGPT told reporters: "However much I feel good seeing ChatGPT become famous and being used by many people globally, making it safe destroyed my family. It destroyed my mental health. As we speak, I'm still struggling with trauma."

His wife left him after watching him change from the work.

It's Not Just One Company

This isn't a Meta problem or an OpenAI scandal. It's systemic across the entire tech industry:

Meta/Facebook employs over 15,000 content moderators globally through third-party contractors in Kenya, the Philippines, and India, paying as little as $1.50/hour.

OpenAI paid Kenyan workers through Sama between $1.32 and $2 per hour to filter toxic content for ChatGPT. When the project was done, Sama closed its Nairobi office, rendering all 200 workers jobless.

TikTok uses Telus Digital in Turkey, where 1,000 workers review disturbing content. When they tried to unionize in 2024, at least 15 were fired.

Google, Amazon, and Microsoft use vendors like Appen for data-labeling jobs paying as little as $1.77 per task.

YouTube and Twitter rely heavily on Philippine-based contractors reviewing the worst content on the internet.

The Outsourcing Shell Game

Here's how tech companies maintain plausible deniability:

- Hire third-party firms like Sama, Telus, Cognizant, Accenture, Majorel
- Locate them in countries with weak labor laws (Kenya, Philippines, India, Colombia, Turkey)
- Pay the outsourcing firm well (OpenAI paid Sama $12.50/hour per worker)
- Let the outsourcing firm pay workers poverty wages ($1-2/hour)
- Maintain legal distance from the actual working conditions
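To make the gap concrete, here is a minimal sketch of the arithmetic using only the figures quoted in this article ($12.50/hour billed per worker, $1.32 to $2 per hour paid). The function name `worker_share` and the constants are illustrative assumptions, and the calculation says nothing about how the remainder is split between the vendor's costs and its margin.

```python
# Illustrative arithmetic only; the figures are the ones quoted in this article.
BILLED_RATE_USD = 12.50               # what OpenAI reportedly paid Sama per worker-hour
WORKER_PAY_RANGE_USD = (1.32, 2.00)   # what workers reportedly received per hour


def worker_share(worker_pay: float, billed_rate: float) -> float:
    """Return the fraction of the billed hourly rate that reaches the worker."""
    if billed_rate <= 0:
        raise ValueError("billed_rate must be positive")
    return worker_pay / billed_rate


if __name__ == "__main__":
    for pay in WORKER_PAY_RANGE_USD:
        share = worker_share(pay, BILLED_RATE_USD)
        print(f"${pay:.2f}/hour is {share:.1%} of ${BILLED_RATE_USD:.2f}/hour")
```

On these numbers, roughly 11% to 16% of the billed rate reached the person doing the work.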

When Facebook settled a $52 million lawsuit with over 10,000 US content moderators who suffered PTSD, workers in Asia and Africa were entirely left out.

The geographic pattern is deliberate: exporting trauma along old colonial power structures, away from the US and Europe toward the developing world.

The Retaliation

What happens when these workers try to organize?

In Kenya, when 260 content moderators working for Meta through Samasource raised concerns about pay and working conditions, they were all made redundant. Mass firings as punishment.

In Turkey, when Telus workers achieved the required threshold for union recognition in 2024, the company:

- Fired at least 15 union activists
- Changed its legal industry classification to block the union
- Followed union leaders with private security
- Reported organizers to police

This pattern repeats in Colombia, the Philippines, and everywhere these centers operate.

The Illusion of Care

Tech companies tout their "wellness programs." Here's the reality:

- "Counselors" who aren't qualified mental health professionals
- Mandatory monthly check-ins that ask superficial questions
- Unlimited counseling access, but if you use it, you can't meet your productivity quotas
- Blur filters and "wellness breaks" that count against your performance metrics
- Requests for actual psychiatric care systematically ignored

One moderator in Turkey said: "We can take wellness breaks—but if we do, we can't meet our accuracy targets."

The support systems exist to create the appearance of care while maintaining the brutal production quotas that maximize profit.

The AI Training Connection

Here's what makes this especially relevant now: RLHF (Reinforcement Learning from Human Feedback)—the process that makes AI "safe"—uses the exact same traumatic labor.

When you train an AI model to refuse harmful requests, someone has to:

- Generate examples of harmful content
- Label how harmful each example is
- Review the AI's responses to harmful prompts
- Do this thousands of times

Every safety feature in ChatGPT, Claude, Gemini, and other AI systems was built on human exposure to the content those systems now refuse to generate.
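One way to see the point is that every labeled training record implies a person who read or watched the item. The sketch below is a hypothetical illustration, not any company's actual pipeline: the `LabeledExample` record, its 0 to 3 severity scale, and the `exposure_by_labeler` helper are all assumptions made up for this example. It simply counts how many harmful items each labeler was exposed to in a batch.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledExample:
    """One human-labeled item (hypothetical schema)."""
    example_id: str
    labeler_id: str
    severity: int  # assumed scale: 0 = benign, 3 = most severe


def exposure_by_labeler(examples, min_severity=1):
    """Count, per labeler, the items at or above min_severity they had to review."""
    counts = Counter()
    for ex in examples:
        if ex.severity >= min_severity:
            counts[ex.labeler_id] += 1
    return dict(counts)


if __name__ == "__main__":
    batch = [
        LabeledExample("ex-1", "worker-a", 3),
        LabeledExample("ex-2", "worker-a", 0),
        LabeledExample("ex-3", "worker-b", 2),
        LabeledExample("ex-4", "worker-a", 1),
    ]
    print(exposure_by_labeler(batch))
```

A count like this is the kind of figure an exposure limit would have to cap, which is why it matters who tracks it and whether workers can see it.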

What We're Really Paying For

When we celebrate AI safety, we need to ask: safety for whom?

The AI is safe. Users are safe. Platforms are safe from liability. Investors are safe from reputational damage.

But the workers? They're left with:

- Severe PTSD (81% diagnosis rate in one study)
- Destroyed relationships
- Poverty wages ($1-2/hour vs. $30+ for the same work in the US)
- No career prospects (often blacklisted after trying to organize)
- Lifetime psychological trauma

And when they speak up, they're fired. When they organize, companies lawyer up. When they sue, settlements exclude non-Western workers.

The Workers Are Fighting Back

In April 2025, content moderators from nine countries formed the first-ever Global Trade Union Alliance of Content Moderators in Nairobi, Kenya.

They're demanding:

- Living wages that reflect the job's difficulty
- Exposure limits to reduce direct contact with traumatic content
- Realistic productivity quotas (elimination of quotas for the most egregious content)
- Mandatory trauma-informed training
- Accessible 24/7 counseling that continues post-contract
- The right to unionize without retaliation

Their message to tech companies: "You can't outsource responsibility."

What You Can Do

Acknowledge the hidden cost. Every time you use AI, every time you scroll safely through social media, someone paid the psychological price.

Support the workers. The Global Trade Union Alliance needs visibility and solidarity.

Demand transparency. Ask tech companies:

- Who moderates your content and trains your AI?
- What are they paid?
- What mental health support do they receive?
- What happens when they try to organize?

Push for regulation. Content moderation must be recognized as hazardous work with appropriate protections, like mining or emergency response.

Question the AI hype. When companies brag about AI safety, ask about the humans who made it "safe."

The Bottom Line

We've built a digital economy that depends on psychological exploitation. We've created "ethical AI" on the backs of traumatized, underpaid workers in the Global South. We've automated the credit while maintaining human suffering as the infrastructure.

Tech companies know exactly what they're doing. They pay outsourcing firms $12.50/hour while workers receive $1.50. They locate operations in countries with minimal labor protections. They maintain legal distance through complex contractor chains. They fire workers who organize.

This isn't a bug in the system. It's the business model.

The next time you see ChatGPT refuse a harmful request, remember: someone in Nairobi read that horror so you wouldn't have to. They're probably still having nightmares about it. They were probably paid less than the cost of your coffee.

And when they asked for help, they were told their trauma was the cost of "lifting people out of poverty."

The exploitation is real. The trauma is documented. The time for change is now.

References & Key Sources

Major Investigations

CNN - Facebook PTSD Diagnoses in Kenya (December 2024)

https://www.cnn.com/blog/businessfacebook-content-moderators-kenya-ptsd-intl

Breaking: 144 content moderators diagnosed with PTSD; 81% diagnosis rate

TIME - Inside Facebook's African Sweatshop (February 2022)

https://time.com/6147458/facebook-africa-content-moderation-employee-treatment/

Comprehensive investigation into Sama's Kenya operations for Meta

TIME - OpenAI Used Kenyan Workers on Less Than $2 Per Hour (January 2023)

https://time.com/6247678/openai-chatgpt-kenya-workers/

Exposé on ChatGPT training conditions and worker exploitation

The Bureau of Investigative Journalism - TikTok Workers Sue Employer Over Union-Busting (March 2025)

https://www.thebureauinvestigates.com/stories/2025-03-15/tiktok-workers-sue-employer-over-union-busting-firings

Turkish Telus workers fight back against retaliation

Rest of World - The Despair and Darkness of People Will Get to You (April 2023)

https://restofworld.org/2020/facebook-international-content-moderators/

Asian moderators left out of Facebook's $52 million settlement

Rest of World - TikTok Moderators in Turkey Fight Trauma, Burnout, Union-Busting (August 2025)

https://restofworld.org/2025/tiktok-moderators-turkey/

On-the-ground reporting from Telus operations in Izmir

Slate - A ChatGPT Contractor Says He's Still Traumatized (May 2023)

https://slate.com/technology/2023/05/openai-chatgpt-training-kenya-traumatic.html

First-person testimony from OpenAI content moderator

CBS News 60 Minutes - Kenyan Workers with AI Jobs (November 2024)

https://www.cbsnews.com/news/ai-work-kenya-exploitation-60-minutes/

Investigation into AI sweatshops; wage discrepancies revealed

The Washington Post - Content Moderators See the Worst of the Web (July 2019)

https://www.washingtonpost.com/technology/blog/social-media-companies-are-outsourcing-their-dirty-work-philippines-generation-workers-is-paying-price

Early reporting on Philippines outsourcing for YouTube, Facebook, Twitter

Legal & Labor Rights Reporting

International Bar Association - You Can't Outsource Responsibility

https://www.ibanet.org/You-cant-outsource-responsibility

Legal analysis of the Motaung case and Foxglove's advocacy

Institute for Human Rights and Business - Content Moderation Factory Floor (August 2025)

https://www.ihrb.org/latest/content-moderation-is-a-new-factory-floor-of-exploitation-labour-protections-must-catch-up

Policy analysis on cross-border labor exploitation

Business & Human Rights Resource Centre - OpenAI and Sama

https://www.business-humanrights.org/en/latest-news/openai-and-sama-hired-underpaid-workers-in-kenia-to-filter-toxic-content-for-chatgpt/

Documentation of labor rights violations

MediaNama - Kenyan Workers Petition Parliament (July 2023)

https://www.medianama.com/2023/07/223-kenyan-workers-call-for-investigation-into-exploitation-by-openai-3/

Workers' formal complaint to Kenyan National Assembly

Worker Organizing & Union Actions

UNI Global Union - Content Moderators Launch Global Alliance (April 2025)

https://uniglobalunion.org/news/moderation-alliance/

Announcement of first-ever global trade union for content moderators

UNI Global Union - Mental Health Protocols Demanded (June 2025)

https://uniglobalunion.org/news/tech-protocols/

Release of "The People Behind the Screens" policy report

UNI Global Union - Telus Union Busting in Turkey (March 2025)

https://uniglobalunion.org/news/new-firings-at-telus-in-turkiye-renew-calls-to-stop-union-busting/

Ongoing campaign against Telus retaliation

Context/Thomson Reuters Foundation - Content Moderators Unite to Tackle Mental Trauma (July 2025)

https://www.context.news/big-tech/content-moderators-for-big-tech-unite-to-tackle-mental-trauma

EU regulations and worker protection efforts

Labor Notes - TikTok Moderators Fight Against Trauma and for a Union (April 2025)

https://labornotes.org/blogs/2025/04/tiktok-moderators-fight-against-trauma-and-union

Labor movement analysis and solidarity campaigns

Academic & Research Sources

Leverhulme Centre for the Future of Intelligence - Emotional Labor Offsetting (January 2025)

https://www.lcfi.ac.uk/news-events/blog/post/emotional-labour-offsetting

Academic analysis of racial capitalist fauxtomation in content moderation

Coda Story - In Kenya's Slums, They're Doing Our Digital Dirty Work (August 2025)

https://www.codastory.com/authoritarian-tech/the-hidden-workers-who-train-ai-from-kenyas-slums/

Investigative journalism on AI training conditions

CMSWire - He Helped Train ChatGPT. It Traumatized Him (May 2023)

https://www.cmswire.com/digital-experience/he-helped-train-chatgpt-it-traumatized-him/

Technical analysis of RLHF labor practices

Additional Reading:

For ongoing coverage, follow:

- UNI Global Union (international labor federation): https://uniglobalunion.org
- Foxglove (tech justice organization): https://www.foxglove.org.uk
- Turkiye Today reporting on Telus workers: https://www.turkiyetoday.com
- Business & Human Rights Resource Centre tracker: https://www.business-humanrights.org
